Hadoop came into the picture to support the large amount of structural, semi-structural and non-structural data. Hive is the query engine for the Hadoop ecosystem and provides SQL interface in Hadoop. We can load the data in the Hadoop in different file format including text, parquet, Avro etc with the feasibility of compression techniques.

As Hadoop supports structural, semi-structural and non-structural data, a question arises is can we directly load all type of data to Hive and perform query analysis on that data. As Hive performs the operation on “Schema on Read” type, so loading data to Hive cannot be a problem. Whether you load the corrupted record, you created a table delimited on ‘^’ and load comma(,) separated data hive will allow us to load the data without any error and while querying on that data it will show all records in a single column. We will discuss this in details in further blogs. In this post, I am going to discuss how we can load the JSON data to Hive Table and Query on that table.

JSON data comes with a key-value pair. To learn about JSON schema you can go through the post:- JSON File Format. We need to use Hive SerDe’s to load the JSON data to Hive tables. This SerDe comes inbuilt with the Hadoop Ecosystem. You can check the jars in Hive lib folder. If the jar is not available in lib folder you can download the jar (Hive-SerDe) and load it to the Hive lib path. You can also create your custom SerDe. There are different ways of registering and putting the custom SerDe jars in the Hive lib path depending upon which Hadoop ecosystem you are using. For Cloudera, you can refer Hive UDF and Hive reloadable path posts.

I am referring below JSON schema to create a Hive table. This schema contains structure and Array.

While creating the table we need to check the schema of the JSON. As our schema is having a complex structure including struct and array of struct. We cannot directly write the create table statement as we used to do in case of simple Hive Table creation. Let’s break this JSON to a smaller one and start writing the table creation query.

- Struct Schema

As the above portion contains the struct type of schema. Start a tag struct with the name of the struct as emp. While creating the Hive table schema for the JSON query, we need to remember to take the same column name as of JSON file. For firstname we cannot use first_name in Hive table schema, else we will end with getting NULL values. Schema query will be:-

emp struct<firstname : string, lastname : string>

- Array Schema

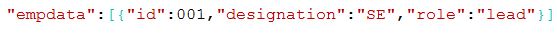

Next, we have the array of struct type under the Tag empdata. To accomplish this we need to create tag array followed by struct and our schema will look like

empdata array<struct< id : int, designation : string, role : string>>

- Simple schema

Last we have a simple schema. We need to write a column name followed by data type to accommodate this type of schema and our schema will look like:-

Country string, location string, pincode int

- SerDe to use

We need to pass the Serde with “ROW FORMAT” clause. I am using JsonSerDe and passing the values as

ROW FORMAT SERDE ‘org.apache.hive.hcatalog.data.JsonSerDe’

Our full query will be:-

create table if not exists json_table(

emp struct<firstname : string, lastname : string>,

empdata array<struct<id : int, designation : string, role : string>>,

company struct<name : string, Building : string>,

personal_record struct<Contact_number : int, email : string>,

country string,

location string,

pincode int

)

ROW FORMAT SERDE

‘org.apache.hive.hcatalog.data.JsonSerDe’;

After creating table next step be to load the JSON File to the Hive table, conventional methods using load data (by using local and HDFS path) command can be used to load data to hive table.

I have kept data at HDFS and using below command to load data:-

load data inpath ‘/user/json.json’ into table json_table;

After loading data to Hive table, we can perform a select * command or select specific columns from the table.

Select *

select * from json_table;

Select Columns

select emp.firstname, emp.lastname, empdata.id, location from json_table;

You can see in the above query, I have used emp.firstname instead of firstname, as we are using JSON complex structure, to map to the columns of the struct we need to use strcuct_column_name.column_name to fetch the desired value.

if you have Jason with a large number of columns and you need limited columns in your table, you can create Hive table with the required columns and also you can interchange the position of the columns, consider in above query you need to fetch country as the first column, you can modify the create table statement by writing country column in the beginning.

Your feedbacks are valuable, looking forward towards your feedbacks.